Midterm Concepts

OLS Regression

Bivariate data and associations between variables (e.g. $X$ and $Y$)

Apparent relationships are best viewed by looking at a scatterplot

Check whether associations are positive/negative, weak/strong, linear/nonlinear, etc.

Correlation coefficient ($r$): measures the strength and direction of a linear association, $-1 \leq r \leq 1$

Correlation does not imply causation! There might be confounding or lurking variables (e.g. $Z$) affecting both $X$ and $Y$
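A minimal sketch of how the correlation coefficient can be computed by hand, using only the Python standard library. The function name `correlation` and the five data points are made up for illustration; they are not from the course materials.

```python
import math

def correlation(x, y):
    """Sample correlation coefficient r between two equal-length sequences."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    # The 1/(n-1) factors in the covariance and variances cancel.
    return s_xy / math.sqrt(s_xx * s_yy)

# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = correlation(x, y)  # positive and fairly strong, but says nothing about causation
```

The sign of `r` gives the direction of the association and its magnitude gives the strength. A lurking variable could produce the same `r` without any causal link between the two series.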

Population regression model: $Y_i = \beta_0 + \beta_1 X_i + u_i$

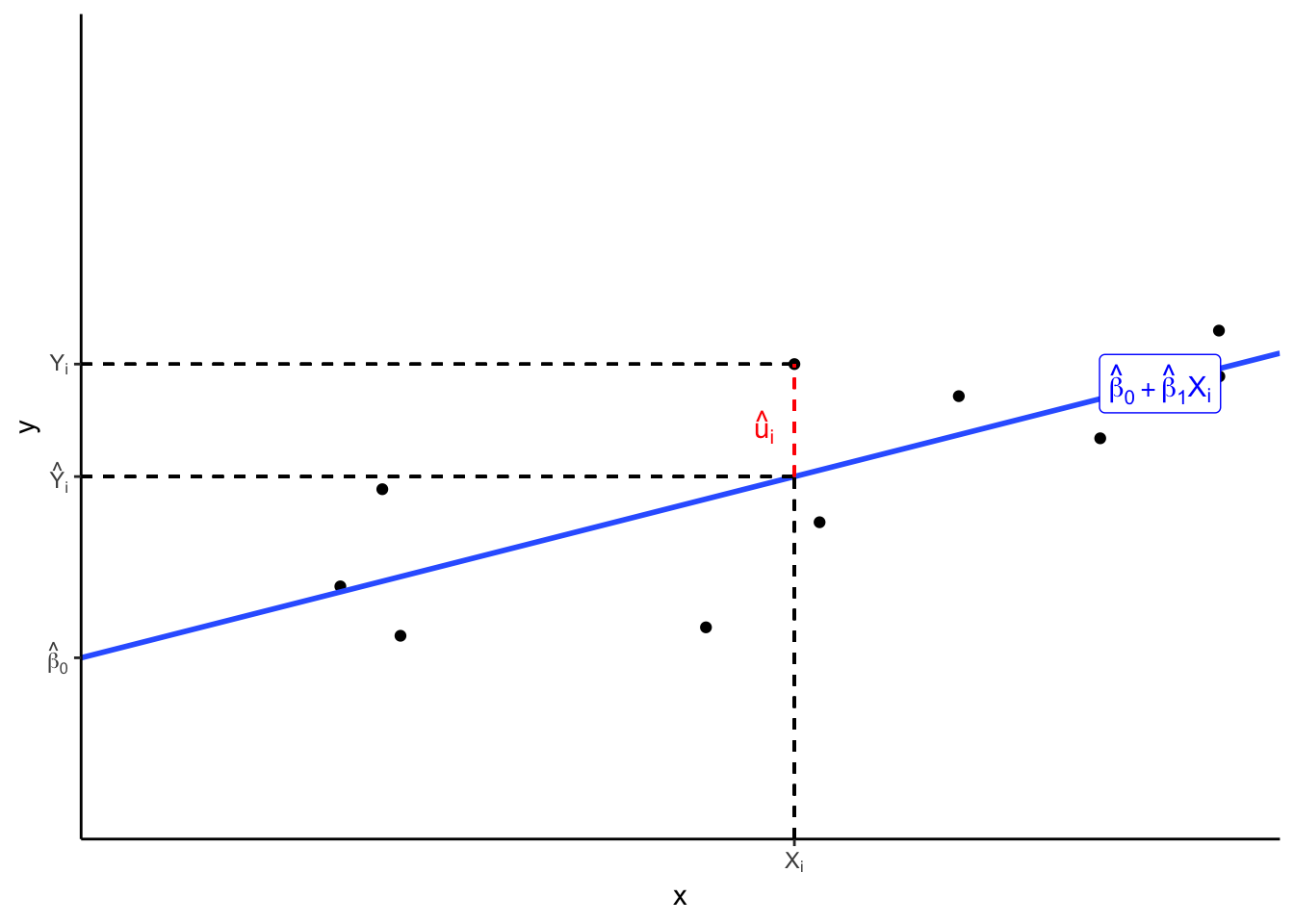

Ordinary Least Squares (OLS) regression model

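OLS estimators ($\hat{\beta}_0$ and $\hat{\beta}_1$)

Minimize sum of squared residuals (SSR): $\min \sum_{i=1}^{n} \hat{u}_i^2$

OLS regression line: $\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_i$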
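As a rough illustration, the bivariate OLS estimators have a closed form: the slope is the sum of cross-deviations divided by the sum of squared deviations of $X$, and the intercept makes the line pass through the point of means. The sketch below uses hypothetical data and the made-up helper names `ols_fit` and `ssr`.

```python
def ols_fit(x, y):
    """Return (beta0_hat, beta1_hat) for a bivariate OLS regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1 = s_xy / s_xx              # slope
    beta0 = y_bar - beta1 * x_bar    # intercept: line passes through (x_bar, y_bar)
    return beta0, beta1

def ssr(x, y, beta0, beta1):
    """Sum of squared residuals for a candidate line."""
    return sum((yi - (beta0 + beta1 * xi)) ** 2 for xi, yi in zip(x, y))

# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
beta0, beta1 = ols_fit(x, y)

# Any other line should give a larger SSR than the OLS line.
ssr_ols = ssr(x, y, beta0, beta1)
ssr_other = ssr(x, y, beta0 + 0.1, beta1 - 0.1)
```

Comparing `ssr_ols` with `ssr_other` is a quick sanity check that the fitted line really does minimize the sum of squared residuals.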

Measures of Fit

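$R^2 = \frac{ESS}{TSS} = 1 - \frac{SSR}{TSS}$, where $ESS = \sum (\hat{Y}_i - \bar{Y})^2$, $SSR = \sum \hat{u}_i^2$, and $TSS = \sum (Y_i - \bar{Y})^2$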

Standard error of the regression (or residuals), SER: average size of the residuals $\hat{u}_i$, $SER = \sqrt{\frac{SSR}{n-2}}$

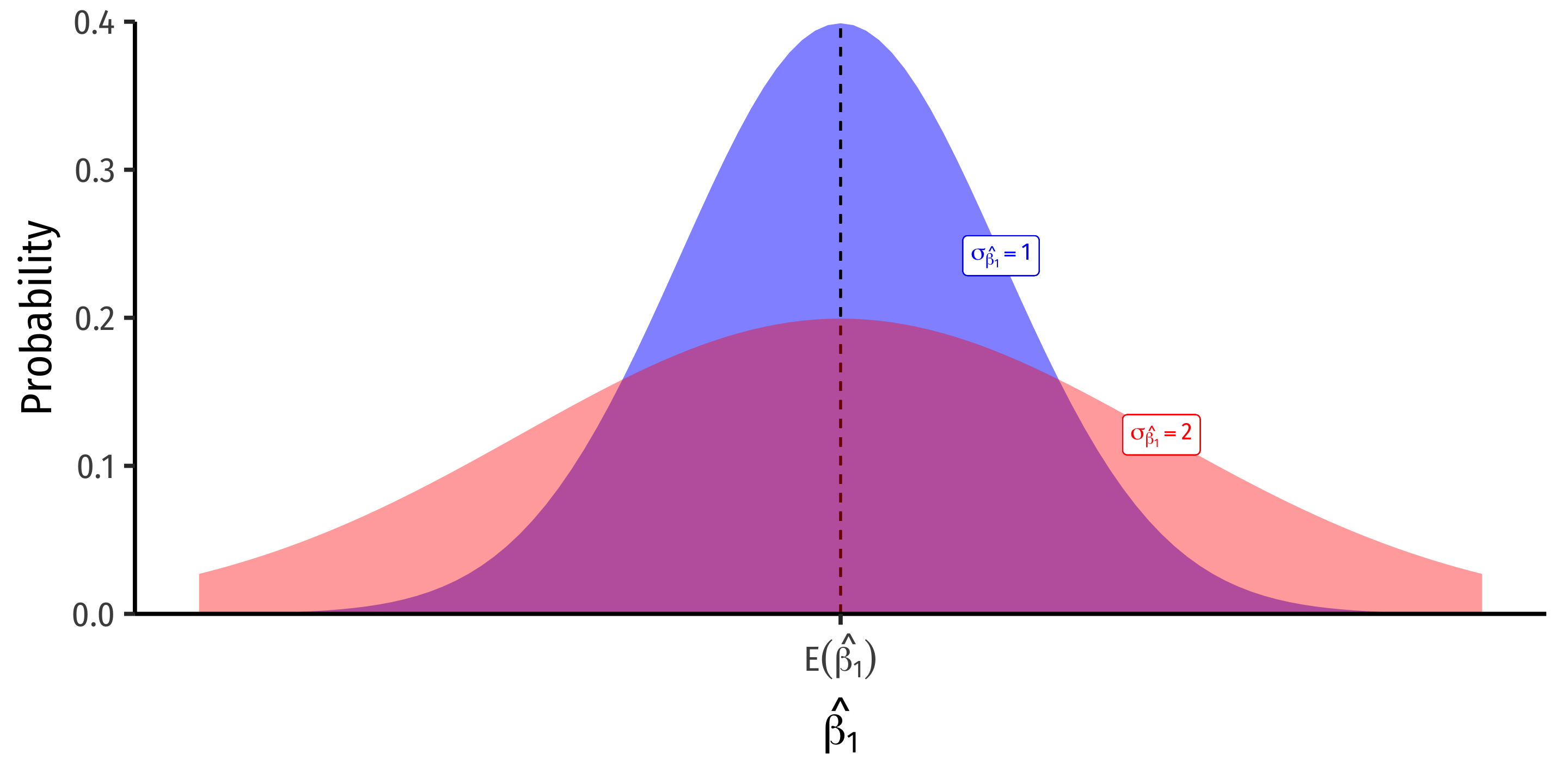
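The usual measures of fit, $R^2$ and the SER, can be computed directly from the residuals. This is a sketch on hypothetical data; the function name `fit_measures` is an assumption for illustration, and the SER uses the $n-2$ degrees-of-freedom correction for a bivariate regression.

```python
import math

def fit_measures(x, y):
    """Return (r_squared, ser) for a bivariate OLS regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    beta0 = y_bar - beta1 * x_bar

    residuals = [yi - (beta0 + beta1 * xi) for xi, yi in zip(x, y)]
    ssr = sum(e ** 2 for e in residuals)          # unexplained variation
    tss = sum((yi - y_bar) ** 2 for yi in y)      # total variation in y

    r_squared = 1 - ssr / tss
    ser = math.sqrt(ssr / (n - 2))                # two estimated parameters
    return r_squared, ser

# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r_squared, ser = fit_measures(x, y)
```

`r_squared` is the share of the variation in `y` explained by the line, while `ser` is in the units of `y` and describes the typical size of a residual.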

Sampling Distribution of $\hat{\beta}_1$

Mean of OLS estimator

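$X$ is exogenous when $corr(X, u) = 0$; equivalently, knowing $X$ tells us nothing about $u$: $E[u|X] = 0$

If $X$ is exogenous, $\hat{\beta}_1$ is unbiased: $E[\hat{\beta}_1] = \beta_1$

If $X$ is endogenous, $corr(X, u) \neq 0$, $\hat{\beta}_1$ is biased: $E[\hat{\beta}_1] \neq \beta_1$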

Can measure strength and direction (+ or -) of bias

Note if unbiased, $E[\hat{\beta}_1] = \beta_1$
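One way to see what bias in the OLS slope looks like is a small Monte Carlo simulation: draw many samples, estimate the slope in each, and average. In this sketch the true model, the sample sizes, and the `endogeneity` knob (which makes the error depend on $X$) are all assumptions chosen for illustration.

```python
import random

def ols_slope(x, y):
    """Bivariate OLS slope estimate."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    return s_xy / s_xx

def mean_slope(endogeneity, n=100, reps=500, beta0=1.0, beta1=2.0, seed=0):
    """Average OLS slope across simulated samples.

    Hypothetical setup: u = endogeneity * X + noise, so any nonzero
    value of `endogeneity` makes X and u correlated.
    """
    rng = random.Random(seed)
    slopes = []
    for _ in range(reps):
        x = [rng.gauss(0, 1) for _ in range(n)]
        u = [endogeneity * xi + rng.gauss(0, 1) for xi in x]
        y = [beta0 + beta1 * xi + ui for xi, ui in zip(x, u)]
        slopes.append(ols_slope(x, y))
    return sum(slopes) / reps

unbiased = mean_slope(endogeneity=0.0)  # centered near the true slope of 2.0
biased = mean_slope(endogeneity=0.5)    # centered near 2.5: biased upward
```

With a positive correlation between $X$ and $u$ the estimates are centered above the true slope; flipping the sign of `endogeneity` would push them below it.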

Assumptions about $u$

The mean of the errors is 0

The variance of the errors is constant over all values of $X$

Errors are not correlated across observations

There is no correlation between $X$ and $u$: $corr(X, u) = 0$

Precision of OLS estimator

Affected by three factors:

Model fit (SER)

Sample size, $n$

Variation in $X$
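The three factors above can be sketched with the homoskedasticity-only formula for the standard error of the slope, $SE(\hat{\beta}_1) = \frac{SER}{\sqrt{n} \cdot sd(X)}$. The function name `se_beta1` and the input values are hypothetical, and `sd_x` is assumed to be the standard deviation of $X$ computed with divisor $n$.

```python
import math

def se_beta1(ser, n, sd_x):
    """Homoskedasticity-only standard error of the OLS slope.

    Assumes sd_x is computed with divisor n, so that n * sd_x**2
    equals the sum of squared deviations of X from its mean.
    """
    return ser / (math.sqrt(n) * sd_x)

baseline = se_beta1(ser=1.0, n=100, sd_x=1.0)
better_fit = se_beta1(ser=0.5, n=100, sd_x=1.0)    # smaller SER -> more precise
more_data = se_beta1(ser=1.0, n=400, sd_x=1.0)     # 4x the sample -> half the SE
more_spread = se_beta1(ser=1.0, n=100, sd_x=2.0)   # more variation in X -> more precise
```

Each of the three changes halves the standard error relative to `baseline`, but note that sample size enters through a square root, so it takes four times the data to get the same gain.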

Heteroskedasticity & Homoskedasticity

Homoskedastic errors ($var(u|X)$ is the same for all values of $X$)

Heteroskedastic errors ($var(u|X)$ changes with the value of $X$)

Heteroskedasticity does not bias our estimates, but it makes the conventional (homoskedasticity-only) variance and standard errors incorrect, typically too low (inflating $t$-statistics and significance!)

Can correct for heteroskedasticity by using robust standard errors
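A sketch of what a robust standard error does in the bivariate case, next to the conventional one. The robust version here is the heteroskedasticity-consistent estimator with an $n/(n-2)$ degrees-of-freedom correction (often labelled HC1); the function name `slope_standard_errors` and the data are assumptions for illustration, and statistical software would normally do this for you.

```python
import math

def slope_standard_errors(x, y):
    """Return (conventional_se, robust_se) for the bivariate OLS slope."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    beta0 = y_bar - beta1 * x_bar
    residuals = [yi - (beta0 + beta1 * xi) for xi, yi in zip(x, y)]

    # Conventional: one common error variance, SER^2 = SSR / (n - 2).
    ser_sq = sum(e ** 2 for e in residuals) / (n - 2)
    se_conventional = math.sqrt(ser_sq / s_xx)

    # Robust (HC1): each squared residual is weighted by its own
    # squared deviation of x, so the error variance may differ by observation.
    weighted = sum((xi - x_bar) ** 2 * e ** 2 for xi, e in zip(x, residuals))
    se_robust = math.sqrt(n / (n - 2) * weighted / s_xx ** 2)
    return se_conventional, se_robust

# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
se_conventional, se_robust = slope_standard_errors(x, y)
```

The slope estimate itself is the same either way; only the standard error changes. In any one sample the robust value can come out larger or smaller than the conventional one.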

Hypothesis Testing of $\beta_1$

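Two-sided alternative: $H_0: \beta_1 = \beta_{1,0}$ vs. $H_a: \beta_1 \neq \beta_{1,0}$

One-sided alternatives: $H_a: \beta_1 > \beta_{1,0}$ or $H_a: \beta_1 < \beta_{1,0}$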

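Compare the test statistic $t = \frac{\hat{\beta}_1 - \beta_{1,0}}{SE(\hat{\beta}_1)}$ (with $\beta_{1,0}$ the hypothesized value) against the critical value, or the $p$-value against the significance level $\alpha$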

Confidence intervals (95%): $\hat{\beta}_1 \pm 1.96 \times SE(\hat{\beta}_1)$ (using the large-sample critical value)
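A minimal sketch of the test and interval for the slope, assuming a large sample so that the standard normal distribution (critical value 1.96) is a reasonable approximation. The estimate `0.6`, the standard error `0.2828`, and the function names are hypothetical values chosen for illustration.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, built from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def slope_test(beta_hat, se, beta_null=0.0, crit=1.96):
    """Return (t_stat, two_sided_p, confidence_interval) for the slope.

    Uses the large-sample normal approximation; `crit` = 1.96
    corresponds to a 95% confidence level.
    """
    t_stat = (beta_hat - beta_null) / se
    p_two_sided = 2.0 * (1.0 - normal_cdf(abs(t_stat)))
    ci = (beta_hat - crit * se, beta_hat + crit * se)
    return t_stat, p_two_sided, ci

# Hypothetical estimate and standard error, for illustration only.
t_stat, p_value, ci = slope_test(beta_hat=0.6, se=0.2828)
reject_at_5_percent = abs(t_stat) > 1.96
```

The two-sided test and the 95% interval always agree: the null value is rejected at the 5% level exactly when it falls outside `ci`. For a one-sided alternative, the $p$-value would use only one tail of the distribution.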